Beyond the Floor Plan

Matthew Fornaciari

mattforni@gmail.com

Framing

This is not a lecture. This is a brown bag.

Ask Me Anything

Understanding is the Goal

Act I

Start at the Beginning

The Existing App

Capture quality matters. The app already knows this.

The Output

Impressive! This works for a lot of homes.

What About Height?

But we don't live in a flat world ...

Why Height Matters

Two homes with identical floor plans can have wildly different energy needs.

| 8 ft ceilings | → | Standard volume |
| 25 ft ceilings | → | 3x the air to condition |

The volume changes everything for HVAC sizing.
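A quick worked example of the comparison above. This is only a sketch: the 578 sq ft footprint is a hypothetical value chosen for illustration, and the room is assumed to be a simple box.

```python
def room_volume_cuft(floor_area_sqft: float, ceiling_height_ft: float) -> float:
    """Air volume of a box-shaped room, in cubic feet."""
    return floor_area_sqft * ceiling_height_ft


floor_area = 578.0  # hypothetical footprint in sq ft, same for both homes

standard = room_volume_cuft(floor_area, 8.0)   # 8 ft ceilings
tall = room_volume_cuft(floor_area, 25.0)      # 25 ft ceilings
ratio = tall / standard                        # footprint cancels out: 25 / 8

print(f"{standard:.0f} cu ft vs {tall:.0f} cu ft, ratio {ratio:.3f}")
```

The footprint cancels out of the ratio, so the "3x" figure depends only on the two ceiling heights.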

The Challenge

How do we take this 2D representation

and expand it into 3D space?

ADR-001: Three Stage Pipeline

Considered six aspects:

Capture, Reconstruction, Semantic Extraction, Visualization, Measurement, Integration

Simplified to three stages:

Capture, Model, Analyze

Act II

Understanding the Constraints

The Gold Standard

Light Detection and Ranging

Emits infrared pulses and measures how long they take to return. Direct distance measurement.
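The time-of-flight idea fits in one line of arithmetic. A minimal sketch, assuming a single pulse and an ideal timer; the function name and the 20 ns sample value are illustrative, not taken from any LiDAR API.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a surface, given a pulse's round-trip time in seconds."""
    # The pulse travels to the surface and back, so halve the path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


# Hypothetical reading: a pulse that returns after 20 nanoseconds.
distance = tof_distance_m(20e-9)
print(f"{distance:.2f} m")
```

A wall a few meters away corresponds to a round trip of only tens of nanoseconds, which is why this needs dedicated hardware.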

Apple's RoomPlan API + LiDAR would solve this easily.

The Accessibility Problem

LiDAR is iPhone Pro models only.

| iPhone Pro users | ~30-40% of iPhone base |

| iPhone market share | ~55% US, lower globally |

| Android with LiDAR | Rare |

| Total with LiDAR | ~20-30% of users |

Validation

"Focusing on non-LiDAR is correct — that's a foundational design decision for us."

"Better spatial data for sizing would be the priority. The user is an Advisor."

— Justin Holmes

| Constraint | Non-LiDAR first: serve the 70% |
| Priorities | 1. Semantic representation for suppliers 2. Faster feedback loops 3. Photorealistic visualization |

ADR-002: Non-LiDAR First

Solve for the larger user base.

LiDAR becomes an enhancement when available.

LiDAR → Non-LiDAR

Act III

Exploring the Landscape

The Modeling Stage

| SfM → | Densify → | Extract |
|---|---|---|
| Structure from Motion | Fill the gaps | Get geometry |
| Find features, match frames, estimate cameras | Multi-View Stereo (MVS) for geometry, Splats for visuals | Turn points into usable surfaces |
| Output: Sparse cloud (thousands of points) | Output: Dense cloud (millions of points) | Output: Mesh or planes (usable geometry) |
| COLMAP | OpenSplat (Metal) | Poisson, RANSAC, Percentile |
| Meshroom, Polycam | COLMAP, OpenMVS (CUDA) | SuGaR, GS2Mesh (CUDA) |
| | KIRI, Polycam, gsplat | |

Build vs Buy

Classic question. I explored both tracks in parallel.

| COLMAP | SfM, sparse reconstruction |

| OpenSplat | Gaussian splatting (Metal) |

| OpenMVS | Dense MVS (CUDA) |

| gsplat | Gaussian splatting (CUDA) |

| Polycam | Full photogrammetry |

| KIRI Engine | Splats only, $500 min API |

| Scaniverse | LiDAR scanning, no API |

| Luma AI | NeRF, slow processing |

The CUDA Wall

NVIDIA's parallel computing platform

Almost everything beyond sparse SfM requires it.

I tried to keep things local:

- OpenMVS: needs CUDA

- SuGaR: needs CUDA

- COLMAP dense: needs CUDA

CUDA does not run on modern Macs (no NVIDIA GPU, no macOS support).

ADR-003: Focus on Concepts

Setting up NVIDIA cloud infrastructure is an orthogonal problem.

AWS EC2 with CUDA, Lambda Labs, etc. all viable for production.

But that's DevOps work, not the problem I'm demonstrating.

Production would use cloud GPU.

Prototype stays focused on pipeline concepts.

The Critical Distinction

After SfM, two paths diverge:

| Densification | Optimizes for | Good for |
|---|---|---|
| MVS | Geometric accuracy | Measurement |
| Gaussian Splatting | Visual fidelity | Rendering |

This maps to the dual audiences:

Suppliers/Advisors need geometry.

Consumers want beautiful visuals.

What Are Gaussian Splats?

A scene represented as millions of fuzzy ellipsoids.

Each ellipsoid has position, size, rotation, color, and opacity. Rendered by "splatting" them onto the screen.
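As a data structure, one splat might look like the sketch below. The field names and the `(w, x, y, z)` quaternion order are assumptions for illustration. Real splat formats typically store these in transformed form (for example, log-scale and richer color coefficients), so this is a simplification. The covariance that gives the ellipsoid its shape is Σ = R·diag(scale²)·Rᵀ.

```python
import math
from dataclasses import dataclass


@dataclass
class Gaussian:
    position: tuple[float, float, float]          # ellipsoid center
    scale: tuple[float, float, float]             # radii along local axes
    rotation: tuple[float, float, float, float]   # quaternion (w, x, y, z)
    color: tuple[float, float, float]             # RGB in 0..1
    opacity: float                                # 0 = invisible, 1 = solid


def rotation_matrix(q):
    """3x3 rotation matrix from a quaternion (normalized first)."""
    w, x, y, z = q
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def covariance(g: Gaussian):
    """Sigma = R * diag(scale^2) * R^T, the ellipsoid's shape in world space."""
    r = rotation_matrix(g.rotation)
    s2 = [s * s for s in g.scale]
    return [[sum(r[i][k] * s2[k] * r[j][k] for k in range(3))
             for j in range(3)] for i in range(3)]


# Hypothetical splat: long along its local Z axis, rotated 90 degrees about Z.
half = math.sqrt(0.5)
splat = Gaussian((0, 0, 0), (1.0, 2.0, 3.0), (half, 0.0, 0.0, half),
                 (0.8, 0.7, 0.6), 0.9)
sigma = covariance(splat)
```

Rotating 90 degrees about Z swaps the X and Y extents, so the diagonal of `sigma` comes out as roughly (4, 1, 9).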

Why they're popular:

- Photorealistic renders from any angle

- Fast to train (minutes, not hours)

- Real-time rendering possible

112K ellipsoids = photorealistic room

The Only Path Forward

With CUDA tools off the table, the only open-source path was Gaussian splatting.

- COLMAP gave me sparse reconstruction

- Built OpenSplat with Metal support on Mac

The renders were ... compelling.

OpenSplat Experiments

| Settings | Gaussians |
|---|---|
| n=2000, d=1, ssim=0.2 | 112K |
| n=2000, d=2, ssim=0.1 | 90K |
| n=3000, d=1, ssim=0.2 | 368K |
| n=5000, d=1, ssim=0.2 | 790K |

| n | iterations (training rounds) |

| d | downscale factor (1 = full res, 2 = half) |

| ssim | structural similarity weight (higher = sharper edges) |

Key insight: more iterations ≠ better quality.

Extraction

Tried to extract geometry via Poisson surface reconstruction.

Principal Component Analysis (PCA) normals

Estimate normals from neighboring points

Quaternion orientation normals

Interpret ellipsoid rotation as surface direction
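For reference, the PCA approach can be sketched as follows. This is not the code used in the experiments. It is a dependency-free illustration that assumes the neighborhood is roughly planar and not degenerate (the points are not all on one line). The normal is the direction of least variance, found here by power iteration on a shifted covariance matrix; in practice a call such as `numpy.linalg.eigh` would replace the loop.

```python
import math


def pca_normal(points, iterations=100):
    """Unit normal of a roughly planar set of 3D points (sign is arbitrary)."""
    n = len(points)
    centroid = [sum(p[i] for p in points) / n for i in range(3)]
    centered = [[p[i] - centroid[i] for i in range(3)] for p in points]
    cov = [[sum(c[i] * c[j] for c in centered) / n for j in range(3)]
           for i in range(3)]

    # The smallest eigenvector of cov is the largest eigenvector of
    # (trace * I - cov), which power iteration can find.
    trace = cov[0][0] + cov[1][1] + cov[2][2]
    shifted = [[(trace if i == j else 0.0) - cov[i][j] for j in range(3)]
               for i in range(3)]

    def matvec(m, v):
        return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]

    def variance_along(v):
        return sum(a * b for a, b in zip(v, matvec(cov, v)))

    # Start from each axis so at least one start overlaps the true normal,
    # then keep the candidate with the least variance along it.
    candidates = []
    for axis in range(3):
        v = [1.0 if i == axis else 0.0 for i in range(3)]
        for _ in range(iterations):
            w = matvec(shifted, v)
            norm = math.sqrt(sum(x * x for x in w))
            v = [x / norm for x in w]
        candidates.append(v)
    return min(candidates, key=variance_along)


# Hypothetical neighborhood: a patch of the tilted plane z = x.
patch = [(float(x), float(y), float(x)) for x in range(5) for y in range(5)]
normal = pca_normal(patch)
```

This only gives a meaningful answer when the input points actually sit on a surface.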

Both attempts: garbage. Why?

ADR-004: Splats Optimize for Appearance

Gaussian splats optimize for appearance, not geometry.

The ellipsoid centers float wherever they need to be to look good when rendered. They don't lie on surfaces.

Beauty ≠ Truth

Beautiful renders don't stop the trucks from rolling. They don't size equipment.

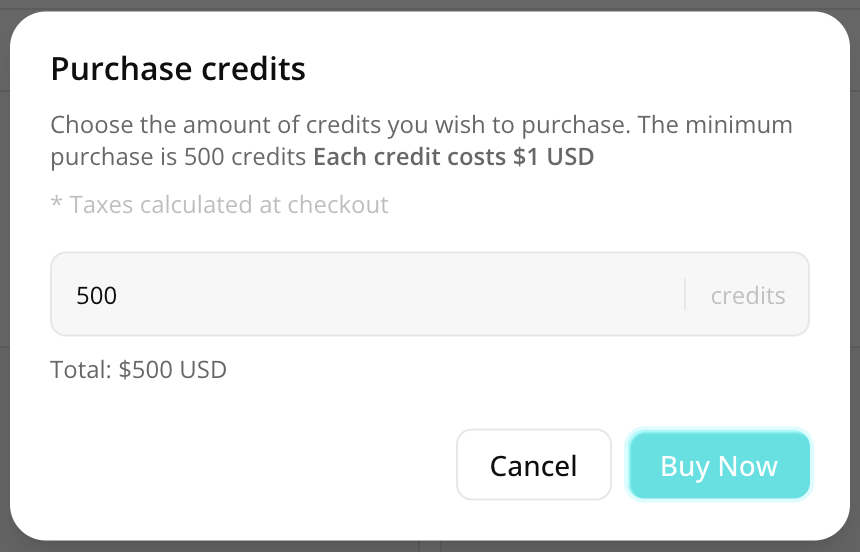

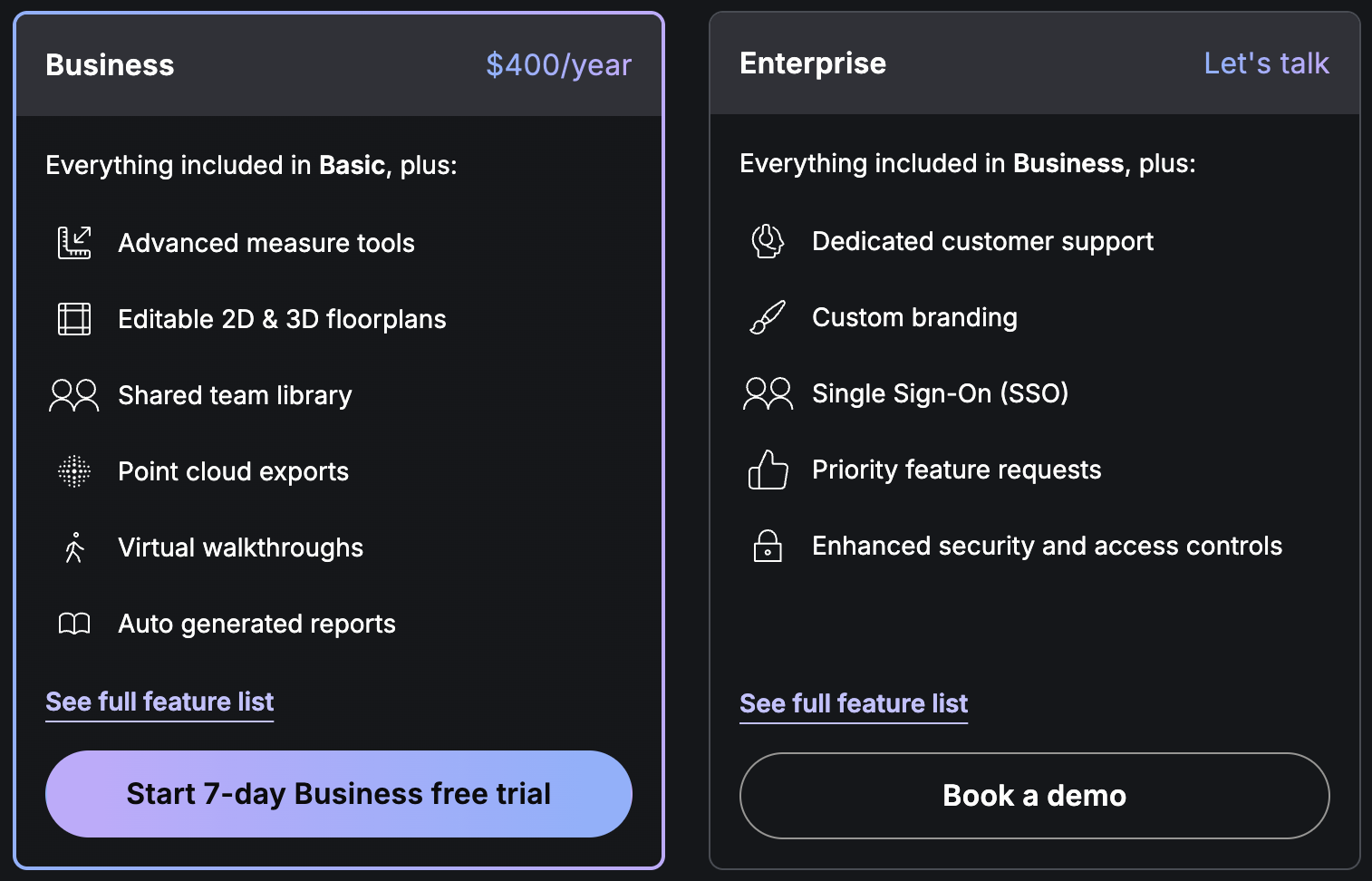

Commercial Track: Back to Densify

Turned to commercial tools for reliable dense reconstruction.

KIRI Engine

Good Gaussian splats, but inconsistent geometry. I struggled to extract surfaces reliably.

$500 minimum top-up

Polycam

Decent splats, but excels at reliable dense point clouds. Full photogrammetry pipeline.

$400/year Business

Polycam: 1.1M surface-bound points we can actually use.

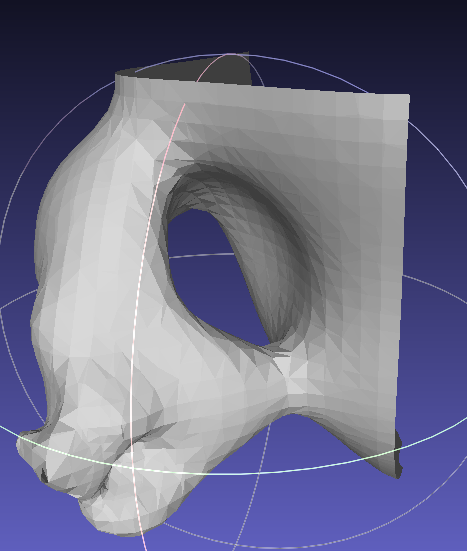

Dense Point Cloud

1,120,180 surface-bound points

Polycam's photogrammetry gives us real geometry.

Points that actually lie on surfaces. Not optimized for appearance.

Now we can extract structure.

Poisson Mesh Extraction

24,174 triangles

With real surface points, Poisson reconstruction works.

Captures every bump, curve, and imperfection in the room.

Not that useful for visualization.

Probably overkill for Manual J.

What is it we actually need?

The Razor

Dense cloud: 1.1M points

Poisson mesh: 24K triangles

Manual J needs: 6 planes

Floor. Ceiling. Four walls.

That's it. That's all we need for volume calculation.

Plane Detection

| Method | Approach | Dimensions | Area | Planes |
|---|---|---|---|---|
| RANSAC | Iterative plane fitting | 26.4 × 27.1 × 22.4 ft | 716 sqft | 5 of 6 |
| Percentile | Find the edges | 23.5 × 24.6 × 22.1 ft | 578 sqft | 6 of 6 |
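For readers new to RANSAC, here is a generic single-plane version. It is a textbook sketch, not the implementation behind the numbers in the table; the threshold, iteration count, and seed are hypothetical parameters.

```python
import random


def plane_from_points(p, q, r):
    """Plane through three points as (unit normal, d) with normal . x = d."""
    u = [q[i] - p[i] for i in range(3)]
    v = [r[i] - p[i] for i in range(3)]
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    length = sum(c * c for c in n) ** 0.5
    if length < 1e-12:
        return None  # the three points are collinear, no unique plane
    n = [c / length for c in n]
    return n, sum(n[i] * p[i] for i in range(3))


def ransac_plane(points, threshold=0.05, iterations=200, seed=0):
    """Fit one plane: sample 3 points, count inliers, keep the best."""
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        normal, d = plane
        inliers = [p for p in points
                   if abs(sum(normal[i] * p[i] for i in range(3)) - d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers


# Hypothetical cloud: a 10 x 10 floor at z = 0 plus ten stray points.
floor = [(float(x), float(y), 0.0) for x in range(10) for y in range(10)]
strays = [(float(i), float(i), 1.0 + i) for i in range(10)]
plane, inliers = ransac_plane(floor + strays)
```

Finding a whole room this way means repeating the fit, removing inliers each time, until the walls, floor, and ceiling are all accounted for.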

Manhattan World: Walls perpendicular, floors horizontal, aligned to axes.

- Floor: lowest 2% of Z

- Ceiling: highest 2% of Z

- Walls: X/Y boundaries

→ 6 planes → 24 vertices

Simpler. More complete. No iterative fitting.
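The percentile idea can be sketched in a few lines. Several things here are assumptions: the cloud is already axis-aligned (Manhattan World), the floor and ceiling are taken at the 2nd and 98th percentile of Z, and the same trim is applied to X and Y for the walls. The function names are illustrative.

```python
def percentile(values, pct):
    """Nearest-rank percentile of a list of numbers (pct in 0..100)."""
    ordered = sorted(values)
    index = round(pct / 100 * (len(ordered) - 1))
    return ordered[index]


def manhattan_box(points, trim_pct=2.0):
    """Axis-aligned room box from trimmed percentiles along X, Y, and Z."""
    bounds = []
    for axis in range(3):
        coords = [p[axis] for p in points]
        # Trimming both ends ignores stray points beyond the real surfaces.
        bounds.append((percentile(coords, trim_pct),
                       percentile(coords, 100 - trim_pct)))
    (x0, x1), (y0, y1), (z0, z1) = bounds
    width, depth, height = x1 - x0, y1 - y0, z1 - z0
    return {
        "width": width,
        "depth": depth,
        "height": height,
        "area": width * depth,
        "volume": width * depth * height,
    }


# Hypothetical cloud: 101 points spread along each axis.
cloud = [(float(i), 2.0 * i, 3.0 * i) for i in range(101)]
box = manhattan_box(cloud)
```

Each axis yields two bounds, so three axes give the six planes, and each rectangular plane contributes four corners.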

The Output

6 planes

    {
      "room": {
        "width_ft": 23.5,
        "depth_ft": 24.6,
        "height_ft": 22.1,
        "area_sqft": 578.0
      },
      "calibration": {
        "method": "reference_height",
        "reference_height": 25.0,
        "scale_factor": 1.0
      }
    }

Circle Back: The 25' Ceilings

Expected: 25 ft. Detected: 22.1 ft.

Vaulted ceilings slope. Percentile bounds find the average, not the peak.

Is it perfect? No. Is it close? Pretty damn close.

For Manual J with 15-25% built-in tolerance? Good enough.
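The arithmetic behind "good enough", as a sketch. It uses the 25.0 ft reference height and the 22.1 ft detected height from the output above, and assumes the tolerance band can be compared directly against the height error.

```python
def relative_error(expected: float, detected: float) -> float:
    """Absolute error as a fraction of the expected value."""
    return abs(expected - detected) / expected


expected_height_ft = 25.0   # reference_height in the output
detected_height_ft = 22.1   # height_ft in the output

error = relative_error(expected_height_ft, detected_height_ft)

# Compare against the low end of the 15-25% band to be conservative.
within_tolerance = error <= 0.15

print(f"height error: {error:.1%}, within tolerance: {within_tolerance}")
```

With the footprint held fixed, the volume error is the same fraction as the height error.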

The Punchline

1.1M points → 6 planes

The simplest path that actually works.

Act IV

Outcomes & Future Work

What This Means for Zero Homes

What we achieved:

- Volume, not just footprint

- Same video input

- Non-LiDAR by design

A few days of exploration. Proof of concept, not production.

What we don't have yet:

- Windows

- Doors

- Architectural features

For better equipment sizing, we need more semantic fidelity.

The Next Challenge

Semantic segmentation to identify windows, doors, features.

frames → find surfaces → wall, window, door → 2D → 3D → walls + windows + doors

SAM 2 is one promising tool. Early experiments show it can track surfaces across video.

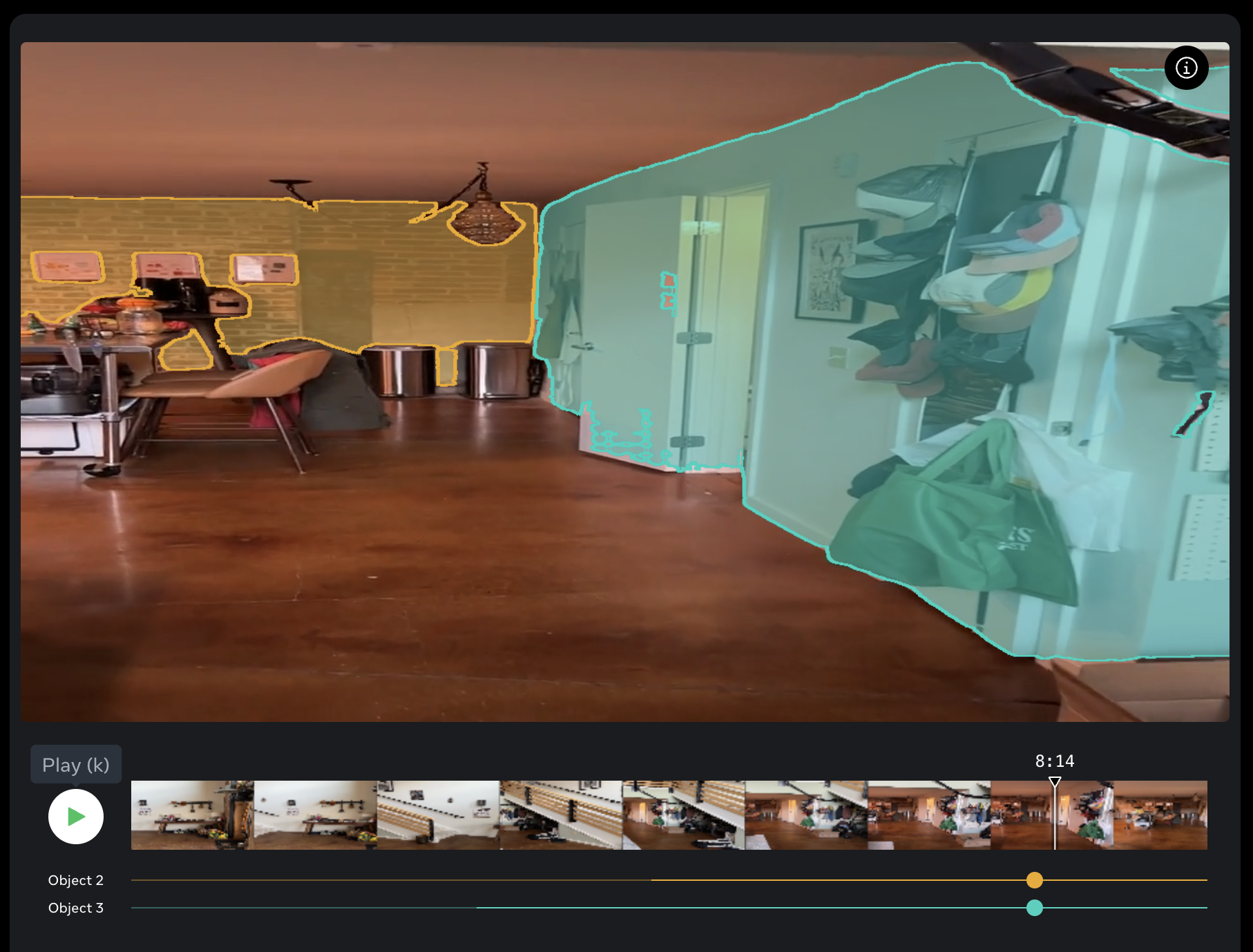

SAM 2: Early Experiments

Walls tracked across video. Windows visible (over-segmented into panes).

Needs tuning, but the foundation is there.

Recommended Next Steps

| 1. LiDAR + RoomPlan | For users who have it, use the gold standard. The ideal customer profile (ICP) likely has newer iPhones. |

| 2. Semantic Segmentation | Evaluate SAM 2, other tools for identifying windows, doors, features from video. |

| 3. Polycam Partnership | Could abstract the pipeline. Trade-off: vendor dependency. Enterprise inquiry pending. |

| 4. CUDA Methods | SuGaR, GS2Mesh, Dense MVS for higher fidelity non-LiDAR. Cloud GPU when ready. |

Add complexity only when the problem demands it.

Beyond the Floor Plan

Thank you.
